hadoop学习之-Sqoop与关数据库(mysql)之间导入实践

一、 Sqoop概述

Sqoop是一个用来将Hadoop和关系型数据库中的数据相互转移的工具,可以将一个关系型数据库(例如: MySQL ,Oracle ,Postgres等)中的数据导进到Hadoop的HDFS中,也可以将HDFS的数据导进到关系型数据库中。

二、 Sqoop安装配置

软件下载:

Sqoop官方版本:http://apache.dataguru.cn/sqoop/1.4.2/

Sqoop CDH版本:http://archive.cloudera.com/cdh/3/sqoop-1.2.0-CDH3B4.tar.gz

Hadoop CDH版本:http://archive.cloudera.com/cdh/3/hadoop-0.20.2-CDH3B4.tar.gz

安装环境:

--所涉及软件版本

Os version:redhad linux 5.6 64bit

Hadoop version:Hadoop-0.20.2 for linux

Sqoop version:sqoop-1.2.0-CDH3B4

Hbase version:hbase-0.90.5

Mysql version:5.5.24 MySQL Community Server (GPL) for windows 64bit

Oracle version:oracel 11.2.0.3 for linux 64bit

Hadoop架构:

主机名

IP

节点名

进程名

gc

192.168.2.100

master

namenode,jobtracker

rac1

192.168.2.101

slave

datanode,tasktracker

Rac2

192.168.2.102

slave

datanode,tasktracker

安装步骤:

1. 安装准备

之前已经安装Hadoop-0.20.2,因sqoop官方版本不支持此版本,但可使用CDH3版本,如上面的下载链接。为了测试方便,可以通过拷贝相应的包到sqoop-1.2.0-CDH3B4/lib下,依然可以使用Hadoop-0.20.2版本。

Hadoop安装参考:http://blog.csdn.net/lichangzai/article/details/8441975

2. 复制 hadoop-core-0.20.2-CDH3B4.jar到sqoop-1.2.0-CDH3B4/lib

--解压

[grid@gc ~]$ pwd

/home/grid

[grid@gc ~]$ tar xzvf sqoop-1.2.0-CDH3B4.tar.gz

[grid@gc ~]$ tar xzvf hadoop-0.20.2-CDH3B4.tar.gz

--复制

[grid@gc hadoop-0.20.2-CDH3B4]$ pwd

/home/grid/hadoop-0.20.2-CDH3B4

[grid@gc hadoop-0.20.2-CDH3B4]$ cp hadoop-core-0.20.2-CDH3B4.jar /home/grid/sqoop-1.2.0-CDH3B4/lib/

3. 复制mysql-connector-java-*.jar到sqoop-1.2.0-CDH3B4/lib

--在之前安装weblogic目录里找了此文件

[root@gc ~]# find / -name "mysql-connector-java*" -print

/home/oracle/Oracle/Middleware/wlserver_10.3/server/lib/mysql-connector-java-commercial-5.0.3-bin.jar

--复制此文件

[root@gc ~]# cd /home/oracle/Oracle/Middleware/wlserver_10.3/server/lib/

[root@gc lib]#cp mysql-connector-java-commercial-5.0.3-bin.jar /home/grid/sqoop-1.2.0-CDH3B4/lib/

[root@gc lib]# cd /home/grid/sqoop-1.2.0-CDH3B4/lib/

[root@gc lib]# chown grid:hadoop mysql-connector-java-commercial-5.0.3-bin.jar

4. 修改SQOOP的文件configure-sqoop

--注释掉hbase和zookeeper检查(除非你准备使用HABASE等HADOOP上的组件)

--否则在进行hbase和zookeeper检查时,可能会卡在这里

[grid@gc bin]$ pwd

/home/grid/sqoop-1.2.0-CDH3B4/bin

[grid@gc bin]$ vi configure-sqoop

#if [ -z "${HBASE_HOME}" ]; then

# HBASE_HOME=/usr/lib/hbase

#fi

#if [ -z "${ZOOKEEPER_HOME}" ]; then

# ZOOKEEPER_HOME=/usr/lib/zookeeper

#fi

# Check: If we can't find our dependencies, give up here.

#if [ ! -d "${HADOOP_HOME}" ]; then

# echo "Error: $HADOOP_HOME does not exist!"

# echo 'Please set $HADOOP_HOME to the root of your Hadoop installation.'

# exit 1

#fi

#if [ ! -d "${HBASE_HOME}" ]; then

# echo "Error: $HBASE_HOME does not exist!"

# echo 'Please set $HBASE_HOME to the root of your HBase installation.'

# exit 1

#fi

#if [ ! -d "${ZOOKEEPER_HOME}" ]; then

# echo "Error: $ZOOKEEPER_HOME does not exist!"

# echo 'Please set $ZOOKEEPER_HOME to the root of your ZooKeeper installation.'

# exit 1

#fi

5. 启动hadoop

--查看设置的环境变量

[grid@gc ~]$ env

HOSTNAME=gc.localdomain

SHELL=/bin/bash

HADOOP_HOME=/home/grid/hadoop-0.20.2

HISTSIZE=1000

SQOOP_HOME=/home/grid/sqoop-1.2.0-CDH3B4

OLDPWD=/home/grid/sqoop-1.2.0-CDH3B4/bin

USER=grid

PATH=/usr/kerberos/bin:/usr/local/bin:/bin:/usr/bin:/home/grid/bin:/usr/java/jdk1.6.0_18/bin:/home/grid/pig-0.9.2/bin:/home/grid/hadoop-0.20.2/bin:/home/grid/hive-0.8.1/bin:/home/grid/bin:/usr/java/jdk1.6.0_18/bin:/home/grid/pig-0.9.2/bin:/home/grid/hadoop-0.20.2/bin:/home/grid/hive-0.8.1/bin:/home/grid/sqoop-1.2.0-CDH3B4/bin

HIVE_HOME=/home/grid/hive-0.8.1

PWD=/home/grid

JAVA_HOME=/usr

PIG_CLASSPATH=/home/grid/pig-0.9.2/conf

LANG=zh_CN

HOME=/home/grid

LANGUAGE=zh_CN.GB18030:zh_CN.GB2312:zh_CN

LOGNAME=grid

--启动并查看hadoop状态

[grid@gc ~]$ cd hadoop-0.20.2/bin

[grid@gc bin]$ ./start-all.sh

[grid@gc ~]$ hadoop dfsadmin -report

Configured Capacity: 45702094848 (42.56 GB)

Present Capacity: 3436060672 (3.2 GB)

DFS Remaining: 3421020160 (3.19 GB)

DFS Used: 15040512 (14.34 MB)

DFS Used%: 0.44%

Under replicated blocks: 4

Blocks with corrupt replicas: 0

Missing blocks: 0

-------------------------------------------------

Datanodes available: 2 (2 total, 0 dead)

Name: 192.168.2.101:50010

Decommission Status : Normal

Configured Capacity: 22851047424 (21.28 GB)

DFS Used: 7520256 (7.17 MB)

Non DFS Used: 20220329984 (18.83 GB)

DFS Remaining: 2623197184(2.44 GB)

DFS Used%: 0.03%

DFS Remaining%: 11.48%

Last contact: Mon Jan 21 22:53:46 CST 2013

Name: 192.168.2.102:50010

Decommission Status : Normal

Configured Capacity: 22851047424 (21.28 GB)

DFS Used: 7520256 (7.17 MB)

Non DFS Used: 22045704192 (20.53 GB)

DFS Remaining: 797822976(760.86 MB)

DFS Used%: 0.03%

DFS Remaining%: 3.49%

Last contact: Mon Jan 21 22:53:46 CST 2013

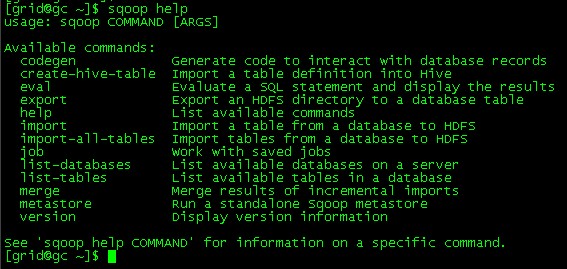

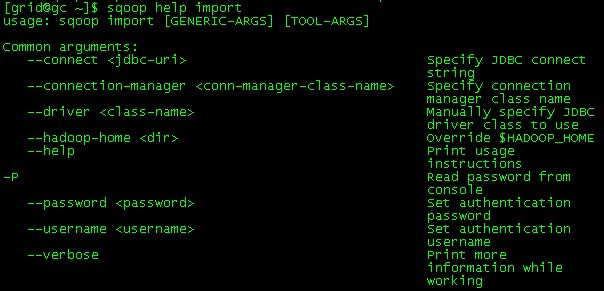

6. sqoop命令测试

三、 Sqoop与数据库导入使用

1. 从mysql导入hdfs数据的实例

mysql安装参考:http://f.dataguru.cn/thread-54367-1-1.html

1) 创建测试数据库sqoop

C:>mysql -uroot -proot

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 70

Server version: 5.5.24 MySQL Community Server (GPL)

Copyright (c) 2000, 2011, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> create database sqoop;

2) 创建sqoop专有用户

mysql> grant all privileges on *.* to 'sqoop'@'192.168.2.1' identified by 'sqoop' with grant option;

Query OK, 0 rows affected (0.00 sec)

3) 生成测试数据

mysql> use sqoop;

Database changed

mysql> create table tb1 as

-> select table_schema,table_name,table_type from information_schema.TABLES;

Query OK, 93 rows affected (0.02 sec)

Records: 93 Duplicates: 0 Warnings: 0

mysql> show tables;

+-----------------+

| Tables_in_sqoop |

+-----------------+

| tb1 |

+-----------------+

1 row in set (0.00 sec)

4) 测试sqoop与mysql连接

[grid@gc bin]$sqoop list-databases --connect jdbc:mysql://192.168.2.1:3306/ --username sqoop --password sqoop

13/01/22 05:20:28 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

13/01/22 05:20:29 INFO manager.MySQLManager: Executing SQL statement: SHOW DATABASES

information_schema

mysql

performance_schema

sakila

sqoop

test

world

参数解释:

--connect jdbc:mysql://localhost:3306/ 指定mysql数据库主机名和端口号

--username 数据库用户名

--password 数据库密码

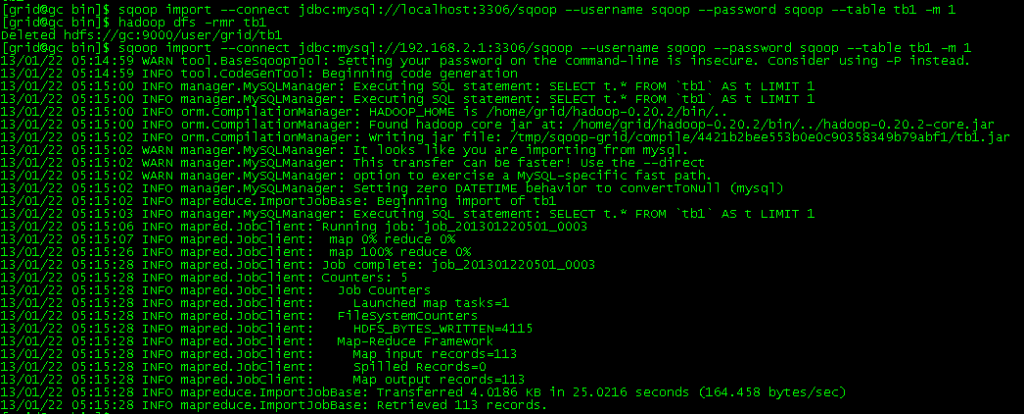

5) Mysql数据导入hdfs

--查看表

[grid@gc ~]$sqoop list-tables --connect jdbc:mysql://localhost:3306/sqoop --username sqoop --password sqoop

13/01/22 04:03:26 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

tb1

--导入

[grid@gc bin]$sqoop import --connect jdbc:mysql://192.168.2.1:3306/sqoop --username sqoop --password sqoop --table tb1 -m 1

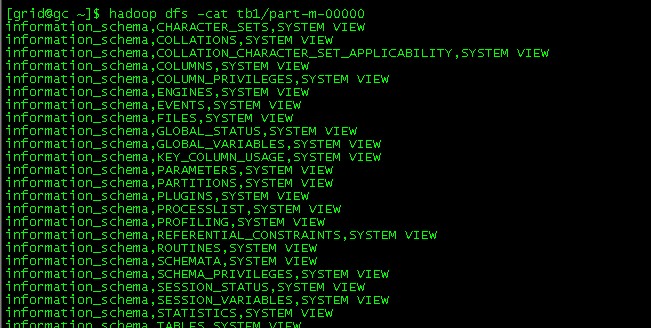

6) 查看导入的HDFS数据

[grid@gc ~]$ hadoop dfs -ls tb1

Found 2 items

drwxr-xr-x - grid supergroup 0 2013-01-22 05:15 /user/grid/tb1/_logs

-rw-r--r-- 2 grid supergroup 4115 2013-01-22 05:15 /user/grid/tb1/part-m-00000

成功完成了mysql数据到HDFS数据的导入

参考文章:

http://f.dataguru.cn/blog-303-693.html

四、 遇到的问题

问题1.

现象:

[grid@gc ~]$ sqoop list-tables --connect jdbc:mysql://localhost:3306/sqoop --username sqoop --password sqoop

13/01/22 04:03:26 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

tb1

tb2

[grid@gc ~]$ sqoop import --connect jdbc:mysql://localhost:3306/sqoop --username sqoop --password sqoop --table tb1 -m 1

13/01/22 04:04:24 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

13/01/22 04:04:24 INFO tool.CodeGenTool: Beginning code generation

13/01/22 04:04:24 INFO manager.MySQLManager: Executing SQL statement: SELECT t.* FROM `tb1` AS t LIMIT 1

13/01/22 04:04:24 INFO manager.MySQLManager: Executing SQL statement: SELECT t.* FROM `tb1` AS t LIMIT 1

13/01/22 04:04:24 INFO orm.CompilationManager: HADOOP_HOME is /home/grid/hadoop-0.20.2/bin/..

13/01/22 04:04:24 INFO orm.CompilationManager: Found hadoop core jar at: /home/grid/hadoop-0.20.2/bin/../hadoop-0.20.2-core.jar

13/01/22 04:04:27 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-grid/compile/e136911b5870cf52ff4bc631a91e7e22/tb1.jar

13/01/22 04:04:27 WARN manager.MySQLManager: It looks like you are importing from mysql.

13/01/22 04:04:27 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

13/01/22 04:04:27 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

13/01/22 04:04:27 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

13/01/22 04:04:27 INFO mapreduce.ImportJobBase: Beginning import of tb1

13/01/22 04:04:27 INFO manager.MySQLManager: Executing SQL statement: SELECT t.* FROM `tb1` AS t LIMIT 1

13/01/22 04:04:30 INFO mapred.JobClient: Running job: job_201301180622_0010

13/01/22 04:04:31 INFO mapred.JobClient: map 0% reduce 0%

13/01/22 04:04:53 INFO mapred.JobClient: Task Id : attempt_201301180622_0010_m_000000_0, Status : FAILED

java.lang.RuntimeException: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at com.cloudera.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:164)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:62)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:117)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:573)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:305)

at org.apache.hadoop.mapred.Child.main(Child.java:170)

Caused by: java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

at com.cloudera.sqoop.mapreduce.db.DBInputFormat.getConnection(DBInputFormat.java:190)

at com.cloudera.sqoop.mapreduce.db.DBInputFormat.setConf(DBInputFormat.java:159)

... 5 more

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

分析:

在网上查,有的说是超时

但我的linux下没有:/etc/my.cnf文件。

最后还是没有找到问题的原因,最终换了一台mysql机器。导入成功

我的异常网推荐解决方案:The driver has not received any packets from the server.,http://www.myexception.cn/j2ee/345243.html