C#: fetching web pages by URL

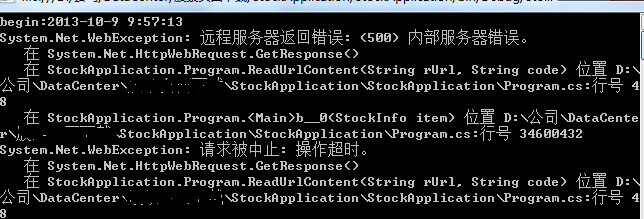

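Could someone take a look at the code below? It fails with a timeout error, and it is also not very fast. I have about 3,000 files to download and would like the whole run to finish in roughly five minutes. The code and the error are shown here; any help is appreciated.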


    List<StockInfo> result = cli.FindAllAs<StockInfo>().ToList();
    Console.WriteLine("begin:" + DateTime.Now.ToString());
    Parallel.ForEach(result, (item) =>
    {
        try
        {
            ReadUrlContent("http://dataapi.eastmoney.com:8080/bbsj/stock.aspx?code=" + item.StockCode + "", item.StockCode);
        }
        catch (Exception ex)
        {
            Console.WriteLine(ex.ToString() + item.StockCode);
        }
    });
    Console.WriteLine("end:" + DateTime.Now.ToString());
    Console.WriteLine(DateTime.Now.ToString());

    public static void ReadUrlContent(string rUrl, string code)
    {
        byte[] buf = new byte[8192];
        HttpWebRequest request = (HttpWebRequest)WebRequest.Create(rUrl);
        HttpWebResponse response = (HttpWebResponse)request.GetResponse();
        Stream resStream = response.GetResponseStream();
        string tempString = null;
        int count = 0;
        using (FileStream fs = new FileStream("D:\\eastmoney\\data\\bbsj\\page\\" + code + ".html", FileMode.OpenOrCreate, FileAccess.Write))
        {
            do
            {
                count = resStream.Read(buf, 0, buf.Length);
                if (resStream.Read(buf, 0, buf.Length) != 0)
                {
                    tempString = Encoding.ASCII.GetString(buf, 0, count);
                    fs.Write(buf, 0, count);
                }
            }
            while (count > 0);
            resStream.Close();
            fs.Close();
        }
    }

[Solution]

A 500 error is raised by the server, so there is nothing you can do about it from the client side.
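Since the question mentions a timeout and this answer mentions a 500, it may help to check which of the two each failed request really is. The following is only a minimal sketch, assuming the failure surfaces as a `WebException`; the names `FetchDiagnostics` and `Classify` are hypothetical and not part of the posted code.

```csharp
using System;
using System.Net;

static class FetchDiagnostics
{
    // Hypothetical helper: reports whether a failed request was a client-side
    // timeout, an HTTP error status sent back by the server, or something else.
    public static string Classify(WebException ex)
    {
        if (ex.Status == WebExceptionStatus.Timeout)
            return "timeout";

        // For HTTP error statuses (such as 500) the server's response
        // is attached to the exception.
        HttpWebResponse http = ex.Response as HttpWebResponse;
        if (ex.Status == WebExceptionStatus.ProtocolError && http != null)
            return "http " + (int)http.StatusCode;

        return "other: " + ex.Status;
    }
}
```

In the posted loop, this could be used by adding a `catch (WebException ex)` clause ahead of the general `catch` and printing `FetchDiagnostics.Classify(ex)` together with `item.StockCode`.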

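Possible causes:

The data you submitted is invalid.

The server has a bug that causes an internal error.

The server limits frequent requests to keep its data from being abused, and you are hitting that limit.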
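If the last cause applies, sending fewer requests at once may help. The sketch below is one possible arrangement, not a confirmed fix. It assumes .NET Framework, where `ServicePointManager.DefaultConnectionLimit` governs connections per host. The names `ThrottledFetcher`, `CopyAll`, `SavePage` and `FetchAll`, the 15-second timeout, and the `maxParallel` value are all assumptions for illustration. It also reads the stream once per iteration (the posted loop calls `Read` twice per pass, so the bytes written no longer match `count`), opens the file with `FileMode.Create` so an older, longer file is truncated, and disposes the response even when an exception is thrown.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Net;
using System.Threading.Tasks;

static class ThrottledFetcher
{
    // Copies input to output with a single Read per iteration,
    // writing exactly the bytes that Read returned.
    public static long CopyAll(Stream input, Stream output)
    {
        byte[] buf = new byte[8192];
        long total = 0;
        int count;
        while ((count = input.Read(buf, 0, buf.Length)) > 0)
        {
            output.Write(buf, 0, count);
            total += count;
        }
        return total;
    }

    // Downloads one page to a file; the using blocks release the
    // connection and the file handle on both success and failure.
    public static void SavePage(string url, string path)
    {
        HttpWebRequest request = (HttpWebRequest)WebRequest.Create(url);
        request.Timeout = 15000; // assumed value, in milliseconds
        using (HttpWebResponse response = (HttpWebResponse)request.GetResponse())
        using (Stream body = response.GetResponseStream())
        using (FileStream fs = new FileStream(path, FileMode.Create, FileAccess.Write))
        {
            CopyAll(body, fs);
        }
    }

    // Fetches every code with at most maxParallel requests in flight.
    public static void FetchAll(IEnumerable<string> codes, string urlPrefix,
                                string folder, int maxParallel)
    {
        // Keep the per-host connection limit in step with the loop's parallelism.
        ServicePointManager.DefaultConnectionLimit = maxParallel;
        ParallelOptions options = new ParallelOptions { MaxDegreeOfParallelism = maxParallel };

        Parallel.ForEach(codes, options, code =>
        {
            try
            {
                SavePage(urlPrefix + code, Path.Combine(folder, code + ".html"));
            }
            catch (WebException ex)
            {
                Console.WriteLine(code + ": " + ex.Status);
            }
        });
    }
}
```

A call might look like `ThrottledFetcher.FetchAll(result.Select(r => r.StockCode), "http://dataapi.eastmoney.com:8080/bbsj/stock.aspx?code=", "D:\\eastmoney\\data\\bbsj\\page", 4);` (this needs `using System.Linq;`). Whether a value such as 4 is low enough to avoid the server's limit, or high enough to finish in five minutes, is something only testing against the real server can show.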